This blog post was originally published at http://blog.aditi.com/cloud/adopting-event-sourcing-saas-windows-azure/.

Event Sourcing

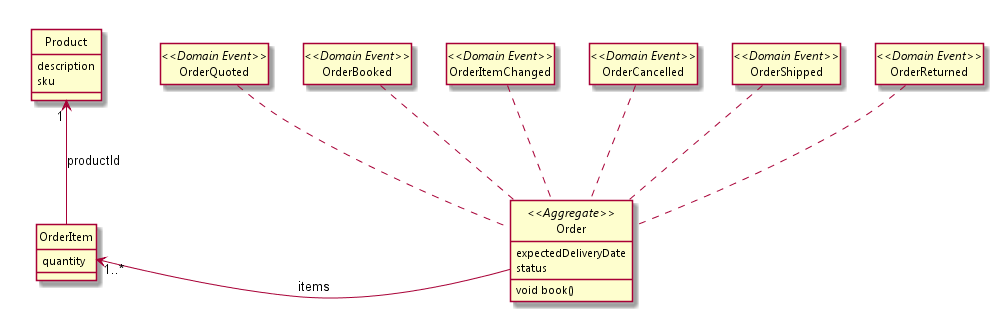

Let us take the order management SaaS system depicted in the figure above as an example.

Assume that Order is the main entity (in the DDD world, this is further specialized as an “AggregateRoot” or simply “Aggregate”). Whenever a request to place an order reaches the system through the service layer, the lifecycle of an Order instance begins. It starts with OrderQuoted and ends with OrderShipped or OrderReturned.

In the typical relational world, we would persist the order instances as:

| PKey | Order ID | Status | ModifiedBy | ModifiedOn |
| --- | --- | --- | --- | --- |
| 1 | OD30513080515 | OrderBooked | Sheik | 2013-06-16 12:35 PM |
| 2 | OD20616150941 | OrderShipped | John | 2013-05-22 10:00 AM |
| .. | .. | .. | .. | .. |

If the order OD30513080515 is delivered, we simply update record #1 in place:

| PKey | Order ID | Status | ModifiedBy | ModifiedOn |
| --- | --- | --- | --- | --- |
| 1 | OD30513080515 | OrderDelivered | Milton | 2013-06-18 02:10 PM |

The event sourcing approach requires domain objects to be persisted using an immutable schema: existing rows are never updated, and every change is appended as a new row. In this case, the data store will look like:

| DbId | Order ID | Status | ModifiedBy | ModifiedOn |
| --- | --- | --- | --- | --- |
| 1 | OD30513080515 | OrderBooked | Sheik | 2013-06-16 12:35 PM |
| 2 | OD20616150941 | OrderShipped | John | 2013-05-22 10:00 AM |
| .. | .. | .. | .. | .. |
| 1 | OD30513080515 | OrderDelivered | Milton | 2013-06-18 02:10 PM |

You may now be under the impression that event sourcing is nothing but an audit log, and that taking this approach in the mainstream database will leave us with underperforming queries and unnecessary database growth. Let us understand the benefits of event sourcing before discussing these concerns:

- The business sometimes needs to track the changes, with the relevant information, that happened to an entity during its lifecycle. For example, if the system allows the customer to add or remove items before the order is shipped, “OrderItemChanged” events play an important role in recalculating pricing by tracking back to the previous “OrderItemChanged” events.

- The immutable persistence model acts as a fault-tolerance mechanism: at any point in time we can reconstruct the whole system, or restore it to a particular point, by replaying the events that happened on each entity.

- Data analytics
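The replay idea behind the first two benefits can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the in-memory `EVENTS` list, the `replay` function, the `items` field, and the timestamp of the `OrderItemChanged` event are all hypothetical and not part of any particular framework.

```python
from datetime import datetime

# Hypothetical append-only log; every entry is an immutable fact about an order.
EVENTS = [
    {"name": "OrderBooked", "orderId": "OD30513080515",
     "occurredOn": datetime(2013, 6, 16, 12, 35), "items": {"PR1234": 1}},
    # Assumed event: the customer raised the quantity before shipping.
    {"name": "OrderItemChanged", "orderId": "OD30513080515",
     "occurredOn": datetime(2013, 6, 17, 9, 0), "items": {"PR1234": 2}},
    {"name": "OrderDelivered", "orderId": "OD30513080515",
     "occurredOn": datetime(2013, 6, 18, 14, 10)},
]


def replay(events, order_id, as_of=None):
    """Fold one order's events, oldest first, into its state at `as_of`."""
    state = {"orderId": order_id, "status": None, "items": {}}
    for event in sorted(events, key=lambda e: e["occurredOn"]):
        if as_of is not None and event["occurredOn"] > as_of:
            break  # everything after this point is in the "future"
        if event["orderId"] != order_id:
            continue
        if event.get("items"):
            state["items"] = dict(event["items"])
        if event["name"] != "OrderItemChanged":
            state["status"] = event["name"]  # item changes keep the status
    return state


current = replay(EVENTS, "OD30513080515")
on_booking_day = replay(EVENTS, "OD30513080515",
                        as_of=datetime(2013, 6, 16, 23, 59))
```

Here `current` reflects all three events, while `on_booking_day` rewinds the same order to the state it had at the end of the day it was booked.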

The above two points keep using the term “event”. A business system essentially performs commands (technically Create, Update, and Delete operations) on business entities, and events are raised as a result of these operations. For example, placing an order in the above SaaS system creates an OrderBooked event with the following facts:

    {
      "name": "orderBooked",
      "entity": "Order",
      "occurredOn": "2013-06-16 12:35PM",
      "orderDetail": {
        "orderId": "OD30513080515",
        "orderItems": [{ "productId": "PR1234", "quantity": 1 }]
      }
    }
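As a rough sketch, an event with this shape could be built and serialized in Python as shown below. The helper name `order_booked_event` is hypothetical; only the field names are taken from the example event.

```python
import json


def order_booked_event(order_id, occurred_on, order_items):
    """Build an OrderBooked event as a plain dictionary (hypothetical helper)."""
    return {
        "name": "orderBooked",
        "entity": "Order",
        "occurredOn": occurred_on,
        "orderDetail": {
            "orderId": order_id,
            # Copy each item so later changes by the caller cannot alter the event.
            "orderItems": [dict(item) for item in order_items],
        },
    }


event = order_booked_event(
    "OD30513080515",
    "2013-06-16 12:35PM",
    [{"productId": "PR1234", "quantity": 1}],
)
payload = json.dumps(event)      # text that could be written to an event store
restored = json.loads(payload)   # reading it back yields the same facts
```

Keeping the event a plain, serializable structure makes it easy to store it as a document or to hand it to other subscribers.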

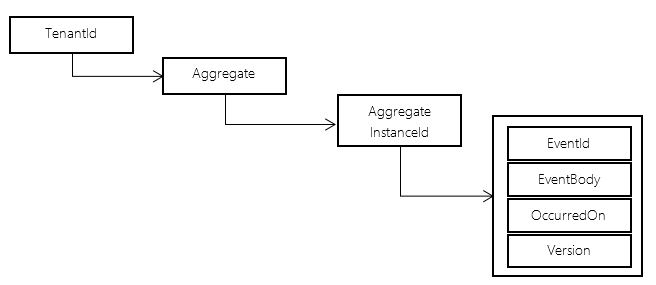

In the distributed domain-driven design approach, the above domain event is published by the Order aggregate; the service layer receives the event and passes it either directly to an event handler or through an event publisher. One of the main subscribers could be an event store subscriber that persists the event into the event store. The event can also be published to an enterprise service bus so that it can be subscribed to and handled by a wide variety of other subscribers. Most likely the schema for an event store looks like below:

Different implementations of event sourcing use different terminology and slightly different schemas. For example, a mainstream event sourcing implementation stores the whole aggregate object itself on every change.
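The publishing flow described above might be sketched as follows. Everything here is an assumption made for illustration: `EventPublisher`, `InMemoryEventStore`, `Order`, `book_order`, and the `aggregateId` field are hypothetical names, and an in-memory list stands in for a real event store or service bus.

```python
class EventPublisher:
    """Fans each published event out to every subscribed handler."""

    def __init__(self):
        self._handlers = []

    def subscribe(self, handler):
        self._handlers.append(handler)

    def publish(self, event):
        for handler in self._handlers:
            handler(event)


class InMemoryEventStore:
    """Append-only store; stands in for a real persistent event store."""

    def __init__(self):
        self._events = []

    def append(self, event):
        self._events.append(dict(event))  # store a copy, never update in place

    def events_for(self, aggregate_id):
        return [dict(e) for e in self._events if e["aggregateId"] == aggregate_id]


class Order:
    """Aggregate that records the domain events raised by its commands."""

    def __init__(self, order_id):
        self.order_id = order_id
        self.pending_events = []

    def book(self, items):
        self.pending_events.append({
            "name": "OrderBooked", "entity": "Order",
            "aggregateId": self.order_id, "orderItems": list(items),
        })


def book_order(order_id, items, publisher):
    """Service-layer function: run the command, then publish what was raised."""
    order = Order(order_id)
    order.book(items)
    for event in order.pending_events:
        publisher.publish(event)
    order.pending_events.clear()
    return order


store = InMemoryEventStore()
notifications = []  # a second, unrelated subscriber
publisher = EventPublisher()
publisher.subscribe(store.append)
publisher.subscribe(lambda e: notifications.append(e["name"]))
book_order("OD30513080515", [{"productId": "PR1234", "quantity": 1}], publisher)
```

The event store is just one subscriber among others, which is what allows the same event to feed a service bus or further handlers without changing the aggregate.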

Hence, event sourcing has the following characteristics:

- Every event should state a single fact about what happened, and it should be atomic

- The data should be “immutable”

- Every event should be “identifiable”
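One possible way to express these characteristics in code, assuming Python and a hypothetical `DomainEvent` type, is a frozen dataclass that receives a unique identifier when it is created:

```python
import uuid
from dataclasses import dataclass, field, FrozenInstanceError


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class DomainEvent:
    name: str            # the single fact this event states
    aggregate_id: str    # the entity the fact is about
    # Identifiable: every event gets its own generated id (an assumed scheme).
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))


booked = DomainEvent("OrderBooked", "OD30513080515")
delivered = DomainEvent("OrderDelivered", "OD30513080515")

try:
    booked.name = "OrderCancelled"   # attempting to rewrite history
    mutation_rejected = False
except FrozenInstanceError:
    mutation_rejected = True
```

A change to an order is then always recorded as a new event, never as an edit of an existing one.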

In the SaaS World

By this time, you understand that event sourcing is not “one size fits all”, particularly in the enterprise world. Based on the SaaS system and the organization's ecosystem, you can suggest different approaches:

- Use the event store as the mainstream data store, and use query-friendly view data stores, such as document or column-oriented databases, to handle all queries from client systems. This is essentially the CQRS approach.

- In enterprises where you feel a relational database is the right candidate for the mainstream database, use the event store as a replacement for the audit log, if the system and regulations permit. This helps you address use cases where tracking past events is a business requirement.
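For the first option, the query-friendly view is typically derived from the events. A minimal sketch, assuming a hypothetical `project_order_status` function and a plain dictionary standing in for the view data store, using the rows from the earlier table:

```python
# Events in append order, as they would be read from the event store.
EVENT_LOG = [
    {"orderId": "OD30513080515", "name": "OrderBooked", "modifiedBy": "Sheik"},
    {"orderId": "OD20616150941", "name": "OrderShipped", "modifiedBy": "John"},
    {"orderId": "OD30513080515", "name": "OrderDelivered", "modifiedBy": "Milton"},
]


def project_order_status(events):
    """Build a read model holding only the latest status of each order."""
    view = {}
    for event in events:
        # Later events overwrite earlier ones in the view, not in the log.
        view[event["orderId"]] = {
            "status": event["name"],
            "modifiedBy": event["modifiedBy"],
        }
    return view


order_status_view = project_order_status(EVENT_LOG)
```

Queries read from `order_status_view`, which looks like the familiar relational row, while the event log itself stays untouched and can be used to rebuild the view at any time.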

Right storage mechanism in Windows Azure

When you are building applications in Windows Azure, you have three official storage options as of now. Let us look at them as a whole:

| S.No | Storage | Pros | Cons |
| --- | --- | --- | --- |
| 1 | Blob Storage | Flexible and simple for implementing the above-mentioned schema | Mostly none |
| 2 | Table Storage | Read friendly | Unfriendly for writes when you use a serialization approach for the event body other than simple JSON serialization |
| 3 | Windows Azure SQL | Based on your relational schema, this could be read and write friendly | Lacks scalability; cost |

Summary

Event sourcing is more than just an audit log, and it can be adopted well in a SaaS system. You should choose the right approach for using it in your system. Windows Azure Blob Storage is one of the nicer options as of now, since there is no native document or column-oriented database support in Windows Azure.

A few event sourcing frameworks in .NET:
