This post discusses the performance benefits of using the .NET TPL effectively for I/O-bound operations.

Intent

When an Azure application needs a non-synchronous programming pattern (asynchronous and/or parallel), the choice of pattern should depend on the VM size chosen for the application and on the type of operation each part of it performs.

Detail

.NET provides the TPL (Task Parallel Library) to make non-synchronous programming much easier. The asynchronous API lets you run I/O-bound and compute-bound operations asynchronously, so the main thread can carry on with the remaining work instead of waiting for those operations to complete. Refer to http://snip.udooz.net/Hbmib2 for details. The parallel API lets you make effective use of the multicore processors on your machine for data-intensive or task-intensive operations. Refer to http://snip.udooz.net/HTLrVv for details.
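As a rough illustration of the two TPL APIs, the sketch below uses `Parallel.For` for a compute-bound loop and an `async` method for an I/O-style wait. `FakeReadAsync` is a hypothetical stand-in that uses `Task.Delay` instead of a real storage call.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Parallel API: split a compute-bound loop across the available cores.
long sumOfSquares = 0;
Parallel.For(1, 1001, i =>
{
    // Interlocked keeps the shared total correct when threads add concurrently.
    Interlocked.Add(ref sumOfSquares, (long)i * i);
});
Console.WriteLine($"Sum of squares 1..1000: {sumOfSquares}");

// Asynchronous API: start an I/O-style operation, keep working, await it later.
Task<string> pending = FakeReadAsync("blob-1");
Console.WriteLine("Main thread keeps running while the read is in flight.");
string content = await pending;
Console.WriteLine($"Read finished: {content}");

// Hypothetical stand-in for an asynchronous storage read.
static async Task<string> FakeReadAsync(string name)
{
    await Task.Delay(50); // simulated network latency; no thread is blocked meanwhile
    return $"contents of {name}";
}
```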

When writing Azure applications, we may need to interact with many external resources such as blobs, queues, and tables. So it is natural to consider asynchronous or parallel programming patterns when the volume of I/O operations is high. In these cases, we should be careful when choosing between asynchronous and parallel. The Extra Small instance provides a shared CPU, the Small instance provides a single core, and Medium and above provide multiple cores. Hence, the asynchronous pattern would be the better option for Extra Small and Small instances. For problems that are highly parallel in nature, the application should be placed on a Medium or larger instance and use the parallel pattern.
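The sizing rule above can be captured in a small decision helper. This is a sketch under stated assumptions: `ChoosePattern` is a hypothetical name, and `Environment.ProcessorCount` is used as a proxy for the instance size (an Extra Small or Small instance would be expected to report a single core).

```csharp
using System;

// Hypothetical helper encoding the rule of thumb: on a single or shared core
// (Extra Small, Small) prefer async; on multicore instances (Medium and above)
// use the parallel pattern only when the work is highly parallel in nature.
static string ChoosePattern(int coreCount, bool highlyParallelWork) =>
    coreCount > 1 && highlyParallelWork ? "Parallel" : "Async";

// ProcessorCount reports the number of logical processors visible to the process.
int cores = Environment.ProcessorCount;
Console.WriteLine($"{cores} core(s), I/O-heavy work: {ChoosePattern(cores, false)}");
Console.WriteLine($"{cores} core(s), highly parallel work: {ChoosePattern(cores, true)}");
```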

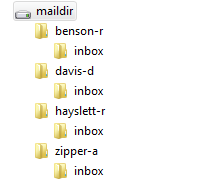

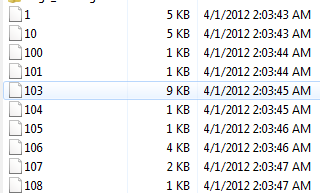

To test the above statement, I built a small proof of concept with heavy I/O. The program reads a large number of blobs from Azure Blob storage to get the data it needs to solve a problem. I took a small subset of the Enron Email dataset from http://www.cs.cmu.edu/~enron/, which contains email messages for various Enron users in their respective inbox folders, as shown in figure 1 and figure 2.

The above figure shows the “inbox” for the user “benson-r”. Every user has approximately 200 or more email messages. Each message is a plain-text email: a block of headers (Message-ID, Date, From, To, Subject, and so on) followed by the message body.
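To count who wrote to a user, the program only needs each message's `From` header. A minimal sketch of that parsing step, assuming the header block ends at the first blank line; `GetSender` and the sample message are hypothetical.

```csharp
#nullable enable
using System;
using System.IO;

// Hypothetical message in the header-then-body layout; addresses are made up.
string sample = string.Join("\n",
    "Message-ID: <0001.example>",
    "From: alice@example.com",
    "To: benson-r@example.com",
    "Subject: Weekly report",
    "",
    "From: this line is in the body, so it is not treated as a header.");

Console.WriteLine(GetSender(sample)); // alice@example.com

// Returns the value of the From header, or null if there is none.
// Only the header block (everything before the first blank line) is scanned.
static string? GetSender(string message)
{
    using var reader = new StringReader(message);
    string? line;
    while ((line = reader.ReadLine()) != null && line.Length > 0)
    {
        if (line.StartsWith("From:", StringComparison.OrdinalIgnoreCase))
            return line.Substring("From:".Length).Trim();
    }
    return null;
}
```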

The program works out how many times a particular user has written email to this user. The email messages reside in a blob container, under the appropriate blob directory for each user.
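The overall flow is: list the blobs under the user's inbox directory, fetch and parse each message, and tally the senders. A sketch of that flow follows, with a hypothetical in-memory dictionary standing in for the blob container so it runs without a storage account; the blob names and addresses are made up.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the blob container: blob name -> message text.
var container = new Dictionary<string, string>
{
    ["benson-r/inbox/1"] = "From: alice@example.com\nTo: benson-r@example.com\n\nHi",
    ["benson-r/inbox/2"] = "From: bob@example.com\nTo: benson-r@example.com\n\nHello",
    ["benson-r/inbox/3"] = "From: alice@example.com\nTo: benson-r@example.com\n\nAgain",
    ["other-user/inbox/1"] = "From: alice@example.com\nTo: other-user@example.com\n\nSkip",
};

var counts = new Dictionary<string, int>();
// 1. List the blobs in this user's inbox directory (a prefix match).
foreach (string blobName in container.Keys.Where(k => k.StartsWith("benson-r/inbox/", StringComparison.Ordinal)))
{
    // 2. Fetch and parse the message to find its sender.
    string? sender = GetSender(container[blobName]);
    if (sender is null) continue;
    // 3. Count messages per sender.
    counts[sender] = counts.TryGetValue(sender, out int soFar) ? soFar + 1 : 1;
}

foreach (var pair in counts)
    Console.WriteLine($"{pair.Key} wrote {pair.Value} message(s)");

// Returns the From header value from the header block, or null.
static string? GetSender(string message)
{
    foreach (string raw in message.Split('\n'))
    {
        string line = raw.TrimEnd('\r');
        if (line.Length == 0) break; // a blank line ends the headers
        if (line.StartsWith("From:")) return line.Substring(5).Trim();
    }
    return null;
}
```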

I applied the “sync, async and parallel” patterns, along with plain Task.Factory.StartNew and Task.Factory.StartNew + ContinueWith, to the “fetching and parsing email messages” logic, which is the chattiest I/O part.

The Code

The normal procedural flow fetches and parses the email messages one at a time.
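A minimal sketch of the sequential shape, assuming a hypothetical blocking `FetchBlob` that sleeps to simulate a storage round trip (no real Azure calls): every fetch must finish before the next one starts, so total time grows with the number of blobs.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;
using System.Threading;

// Hypothetical blob names; a real run would list them from the container.
List<string> blobNames = Enumerable.Range(0, 20).Select(i => $"benson-r/inbox/{i}").ToList();

var counts = new Dictionary<string, int>();
var stopwatch = Stopwatch.StartNew();
foreach (string blobName in blobNames)
{
    string message = FetchBlob(blobName); // blocks for one round trip at a time
    string? sender = GetSender(message);
    if (sender is null) continue;
    counts[sender] = counts.TryGetValue(sender, out int soFar) ? soFar + 1 : 1;
}
stopwatch.Stop();
Console.WriteLine($"Sequential: {stopwatch.ElapsedMilliseconds} ms for {blobNames.Count} blobs");

// Hypothetical blocking fetch: sleeps to simulate latency, then returns a
// message whose sender depends on the blob index (even: alice, odd: bob).
static string FetchBlob(string blobName)
{
    Thread.Sleep(10);
    int index = int.Parse(blobName.Substring(blobName.LastIndexOf('/') + 1));
    string from = index % 2 == 0 ? "alice@example.com" : "bob@example.com";
    return $"From: {from}\nTo: benson-r@example.com\n\nMessage {index}";
}

// Returns the From header value from the header block, or null.
static string? GetSender(string message)
{
    foreach (string raw in message.Split('\n'))
    {
        string line = raw.TrimEnd('\r');
        if (line.Length == 0) break; // a blank line ends the headers
        if (line.StartsWith("From:")) return line.Substring(5).Trim();
    }
    return null;
}
```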

The parallel version performs the same fetching and parsing with the TPL parallel API.
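The parallel shape can be sketched with `Parallel.ForEach`, again with the hypothetical sleeping `FetchBlob`. Several fetches now overlap, but each one still occupies a thread while it waits, so how much overlap you get depends largely on how many threads the scheduler makes available, which is tied to the core count.

```csharp
#nullable enable
using System;
using System.Collections.Concurrent;
using System.Diagnostics;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical blob names; a real run would list them from the container.
var blobNames = Enumerable.Range(0, 20).Select(i => $"benson-r/inbox/{i}").ToList();

// ConcurrentDictionary because several worker threads update the counts at once.
var counts = new ConcurrentDictionary<string, int>();
var stopwatch = Stopwatch.StartNew();
Parallel.ForEach(blobNames, blobName =>
{
    string message = FetchBlob(blobName); // each call still blocks its own thread
    string? sender = GetSender(message);
    if (sender is not null)
        counts.AddOrUpdate(sender, 1, (_, soFar) => soFar + 1);
});
stopwatch.Stop();
Console.WriteLine($"Parallel: {stopwatch.ElapsedMilliseconds} ms for {blobNames.Count} blobs");

// Hypothetical blocking fetch: sleeps to simulate latency, then returns a
// message whose sender depends on the blob index (even: alice, odd: bob).
static string FetchBlob(string blobName)
{
    Thread.Sleep(10);
    int index = int.Parse(blobName.Substring(blobName.LastIndexOf('/') + 1));
    string from = index % 2 == 0 ? "alice@example.com" : "bob@example.com";
    return $"From: {from}\nTo: benson-r@example.com\n\nMessage {index}";
}

// Returns the From header value from the header block, or null.
static string? GetSender(string message)
{
    foreach (string raw in message.Split('\n'))
    {
        string line = raw.TrimEnd('\r');
        if (line.Length == 0) break; // a blank line ends the headers
        if (line.StartsWith("From:")) return line.Substring(5).Trim();
    }
    return null;
}
```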

The third variant is the asynchronous version.
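The asynchronous shape can be sketched by starting every fetch up front and awaiting them together with `Task.WhenAll`. Here `FetchBlobAsync` is hypothetical and uses `Task.Delay` in place of a real asynchronous storage request; while the simulated requests are pending, no thread sits blocked waiting on them.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical blob names; a real run would list them from the container.
var blobNames = Enumerable.Range(0, 20).Select(i => $"benson-r/inbox/{i}").ToList();

var stopwatch = Stopwatch.StartNew();
// Start every fetch first, then wait for all of them together.
string[] messages = await Task.WhenAll(blobNames.Select(name => FetchBlobAsync(name)));
stopwatch.Stop();

var counts = new Dictionary<string, int>();
foreach (string message in messages)
{
    string? sender = GetSender(message);
    if (sender is null) continue;
    counts[sender] = counts.TryGetValue(sender, out int soFar) ? soFar + 1 : 1;
}
Console.WriteLine($"Async: {stopwatch.ElapsedMilliseconds} ms for {blobNames.Count} blobs");

// Hypothetical non-blocking fetch: Task.Delay stands in for an asynchronous
// storage request (in the post, a REST API wrapper plays this role).
static async Task<string> FetchBlobAsync(string blobName)
{
    await Task.Delay(10);
    int index = int.Parse(blobName.Substring(blobName.LastIndexOf('/') + 1));
    string from = index % 2 == 0 ? "alice@example.com" : "bob@example.com";
    return $"From: {from}\nTo: benson-r@example.com\n\nMessage {index}";
}

// Returns the From header value from the header block, or null.
static string? GetSender(string message)
{
    foreach (string raw in message.Split('\n'))
    {
        string line = raw.TrimEnd('\r');
        if (line.Length == 0) break; // a blank line ends the headers
        if (line.StartsWith("From:")) return line.Substring(5).Trim();
    }
    return null;
}
```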

The major difference in the asynchronous version's “fetching and parsing” part is that, instead of the managed storage API, I used the REST API through a wrapper so that I could access the blobs asynchronously. In addition, I used plain TPL tasks in two different ways. In the first, each task simply performs the “fetching and parsing” work.
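A sketch of the plain-task variant, assuming one `Task.Factory.StartNew` per blob that runs the hypothetical blocking `FetchBlob` and the parse on a pool thread, followed by `Task.WaitAll`. The helper names are illustrative, not the post's code.

```csharp
#nullable enable
using System;
using System.Collections.Concurrent;
using System.Diagnostics;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical blob names; a real run would list them from the container.
var blobNames = Enumerable.Range(0, 20).Select(i => $"benson-r/inbox/{i}").ToList();

var counts = new ConcurrentDictionary<string, int>();
var stopwatch = Stopwatch.StartNew();
// One task per blob; each runs the blocking fetch and the parse on a pool thread.
Task[] tasks = blobNames
    .Select(blobName => Task.Factory.StartNew(() =>
    {
        string message = FetchBlob(blobName);
        string? sender = GetSender(message);
        if (sender is not null)
            counts.AddOrUpdate(sender, 1, (_, soFar) => soFar + 1);
    }))
    .ToArray();
Task.WaitAll(tasks);
stopwatch.Stop();
Console.WriteLine($"Task: {stopwatch.ElapsedMilliseconds} ms for {blobNames.Count} blobs");

// Hypothetical blocking fetch: sleeps to simulate latency, then returns a
// message whose sender depends on the blob index (even: alice, odd: bob).
static string FetchBlob(string blobName)
{
    Thread.Sleep(10);
    int index = int.Parse(blobName.Substring(blobName.LastIndexOf('/') + 1));
    string from = index % 2 == 0 ? "alice@example.com" : "bob@example.com";
    return $"From: {from}\nTo: benson-r@example.com\n\nMessage {index}";
}

// Returns the From header value from the header block, or null.
static string? GetSender(string message)
{
    foreach (string raw in message.Split('\n'))
    {
        string line = raw.TrimEnd('\r');
        if (line.Length == 0) break; // a blank line ends the headers
        if (line.StartsWith("From:")) return line.Substring(5).Trim();
    }
    return null;
}
```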

In the second, I attached a continuation to each task using ContinueWith.
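A sketch of the ContinueWith variant, assuming the split is "fetch in the task, parse and count in the continuation". That split, `FetchBlob`, and `GetSender` are illustrative assumptions, not the post's code. The continuation runs only after its fetch has completed, so reading `Result` there does not block.

```csharp
#nullable enable
using System;
using System.Collections.Concurrent;
using System.Diagnostics;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical blob names; a real run would list them from the container.
var blobNames = Enumerable.Range(0, 20).Select(i => $"benson-r/inbox/{i}").ToList();

var counts = new ConcurrentDictionary<string, int>();
var stopwatch = Stopwatch.StartNew();
Task[] tasks = blobNames
    .Select(blobName => Task.Factory
        .StartNew(() => FetchBlob(blobName))   // Task<string>: the blocking fetch
        .ContinueWith(fetch =>
        {
            // Runs after the fetch completes; Result is already available.
            string? sender = GetSender(fetch.Result);
            if (sender is not null)
                counts.AddOrUpdate(sender, 1, (_, soFar) => soFar + 1);
        }))
    .ToArray();
Task.WaitAll(tasks);
stopwatch.Stop();
Console.WriteLine($"Task & ContinueWith: {stopwatch.ElapsedMilliseconds} ms for {blobNames.Count} blobs");

// Hypothetical blocking fetch: sleeps to simulate latency, then returns a
// message whose sender depends on the blob index (even: alice, odd: bob).
static string FetchBlob(string blobName)
{
    Thread.Sleep(10);
    int index = int.Parse(blobName.Substring(blobName.LastIndexOf('/') + 1));
    string from = index % 2 == 0 ? "alice@example.com" : "bob@example.com";
    return $"From: {from}\nTo: benson-r@example.com\n\nMessage {index}";
}

// Returns the From header value from the header block, or null.
static string? GetSender(string message)
{
    foreach (string raw in message.Split('\n'))
    {
        string line = raw.TrimEnd('\r');
        if (line.Length == 0) break; // a blank line ends the headers
        if (line.StartsWith("From:")) return line.Substring(5).Trim();
    }
    return null;
}
```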

Results

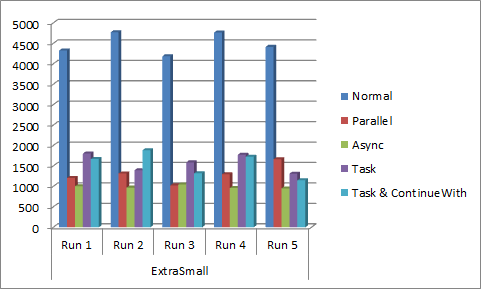

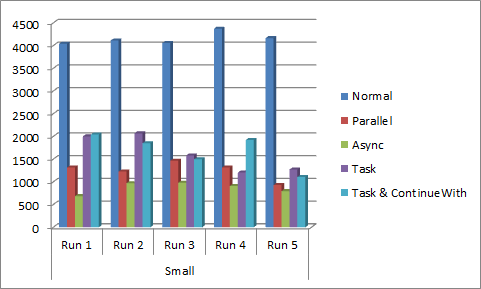

I hosted the worker role and the storage account in the “Southeast Asia” region. On every VM size, I made six runs and discarded the result of the first run. For all tests, I configured ServicePointManager to allow 12 concurrent connections, and I kept this value unchanged on the Medium and Large instances. All results are in milliseconds.
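Raising the connection limit is typically done once in the role's startup code, before any request goes out. A minimal sketch using `ServicePointManager.DefaultConnectionLimit`, the .NET Framework setting that applies to service points created after it is set. Recent .NET versions mark `ServicePointManager` obsolete, so expect a compiler warning there.

```csharp
using System;
using System.Net;

// Allow up to 12 concurrent connections per host. Set this before the first
// request; service points created earlier keep their old limit. The .NET
// Framework default outside ASP.NET (for example, in a worker role) is 2.
ServicePointManager.DefaultConnectionLimit = 12;
Console.WriteLine($"DefaultConnectionLimit = {ServicePointManager.DefaultConnectionLimit}");
```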

Extra Small

| Run | Normal | Parallel | Async | Task | Task & ContinueWith |
|---|---|---|---|---|---|
| Run 1 | 4326 | 1209 | 1004 | 1807 | 1671 |
| Run 2 | 4773 | 1319 | 972 | 1399 | 1887 |
| Run 3 | 4189 | 1027 | 1050 | 1590 | 1322 |
| Run 4 | 4769 | 1299 | 964 | 1778 | 1728 |
| Run 5 | 4416 | 1665 | 952 | 1313 | 1150 |

Small

| Run | Normal | Parallel | Async | Task | Task & ContinueWith |
|---|---|---|---|---|---|
| Run 1 | 4044 | 1319 | 687 | 2003 | 2045 |
| Run 2 | 4116 | 1229 | 972 | 2070 | 1854 |
| Run 3 | 4060 | 1468 | 981 | 1584 | 1501 |
| Run 4 | 4375 | 1316 | 909 | 1208 | 1924 |
| Run 5 | 4167 | 931 | 797 | 1272 | 1109 |

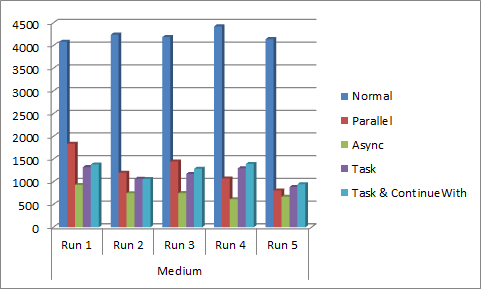

Medium

| Run | Normal | Parallel | Async | Task | Task & ContinueWith |
|---|---|---|---|---|---|
| Run 1 | 4086 | 1839 | 933 | 1326 | 1385 |
| Run 2 | 4245 | 1204 | 751 | 1069 | 1064 |
| Run 3 | 4193 | 1449 | 753 | 1176 | 1291 |
| Run 4 | 4426 | 1076 | 619 | 1300 | 1395 |
| Run 5 | 4145 | 811 | 674 | 888 | 951 |

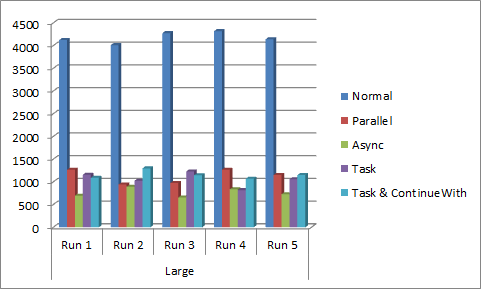

Large

| Run | Normal | Parallel | Async | Task | Task & ContinueWith |
|---|---|---|---|---|---|
| Run 1 | 4124 | 1269 | 697 | 1159 | 1091 |
| Run 2 | 4013 | 945 | 892 | 1028 | 1299 |
| Run 3 | 4277 | 977 | 657 | 1228 | 1148 |
| Run 4 | 4322 | 1270 | 840 | 820 | 1072 |
| Run 5 | 4141 | 1154 | 729 | 1059 | 1151 |

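Surprisingly, irrespective of the VM size, the asynchronous pattern has the best average time for this I/O-bound operation and outshines all the other approaches. Averaged over the five runs, Parallel comes second on the Extra Small and Small instances, while on Medium and Large the plain Task version is slightly faster than Parallel.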
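Averaging the five runs per column makes this comparison easier to check. The sketch below re-enters the numbers from the four tables and computes per-pattern averages; the names `patterns`, `results`, and `averages` are illustrative.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

string[] patterns = { "Normal", "Parallel", "Async", "Task", "Task & ContinueWith" };

// Run times in milliseconds from the tables above, one row per run.
var results = new Dictionary<string, int[][]>
{
    ["Extra Small"] = new[]
    {
        new[] { 4326, 1209, 1004, 1807, 1671 }, new[] { 4773, 1319, 972, 1399, 1887 },
        new[] { 4189, 1027, 1050, 1590, 1322 }, new[] { 4769, 1299, 964, 1778, 1728 },
        new[] { 4416, 1665, 952, 1313, 1150 },
    },
    ["Small"] = new[]
    {
        new[] { 4044, 1319, 687, 2003, 2045 }, new[] { 4116, 1229, 972, 2070, 1854 },
        new[] { 4060, 1468, 981, 1584, 1501 }, new[] { 4375, 1316, 909, 1208, 1924 },
        new[] { 4167, 931, 797, 1272, 1109 },
    },
    ["Medium"] = new[]
    {
        new[] { 4086, 1839, 933, 1326, 1385 }, new[] { 4245, 1204, 751, 1069, 1064 },
        new[] { 4193, 1449, 753, 1176, 1291 }, new[] { 4426, 1076, 619, 1300, 1395 },
        new[] { 4145, 811, 674, 888, 951 },
    },
    ["Large"] = new[]
    {
        new[] { 4124, 1269, 697, 1159, 1091 }, new[] { 4013, 945, 892, 1028, 1299 },
        new[] { 4277, 977, 657, 1228, 1148 }, new[] { 4322, 1270, 840, 820, 1072 },
        new[] { 4141, 1154, 729, 1059, 1151 },
    },
};

var averages = new Dictionary<string, double[]>();
foreach (var entry in results)
{
    int[][] runs = entry.Value;
    // Average each column (pattern) over the five runs.
    double[] avg = Enumerable.Range(0, patterns.Length)
        .Select(col => runs.Average(row => row[col]))
        .ToArray();
    averages[entry.Key] = avg;
    Console.WriteLine($"{entry.Key}: " +
        string.Join(", ", patterns.Select((p, i) => $"{p} {avg[i]:F1}")));
}
```

For example, on the Medium instance the averages come out to 4219.0 ms (Normal), 1275.8 ms (Parallel), 746.0 ms (Async), 1151.8 ms (Task), and 1217.2 ms (Task & ContinueWith).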

Final Words

Hence, the “asynchronous” approach won for this I/O-bound operation (also shown as a diagram here).

I will come up with one more test that covers the areas where the Parallel approach shines. In addition, when you have less I/O and want smooth multithreading, Task and Task + ContinueWith may help you.

What do you think? Share your thoughts!

Many thanks to Steve Marx and Nuno for validating my approach and results; their feedback improved my overall testing strategy.

The source code is available at http://udooz.net/file-drive/doc_download/23-mailanalyzerasyncpoc.html