Domain modeling is always the vital part, and nobody disputes the importance of domain-driven design. This post is about the anti-corruption layer between domain objects and data persistence in the Azure world. Whenever I start working on an object-repository framework, this famous quote, often attributed to Einstein, echoes in my mind:

“In theory, theory and practice are the same. In practice, they are not.”

We have to compromise on the “persistence-agnostic domain model” principle. This happens even with popular frameworks such as ActiveRecord (Rails) and Entity Framework (.NET). I will skip the compromise part for now.

In Azure, you have two choices for persisting objects: Table Storage and SQL Azure. Typically, Web 2.0 applications take a mixed approach, keeping frequently used read-only data in a NoSQL store and the source of truth in a relational data store (CQRS). This is a sensible approach when your application runs in the cloud, because every byte is metered and billed. In this article, I outline the concerns that arise when you choose Table Storage as the source of truth. Would Azure Table Storage be a good choice for a domain object repository? It is too early for verdicts such as “Azure Table Storage is highly recommended for a domain object repository” or “it should be partially or completely avoided”, but I can share some of my experiences with it.

For the sake of performance, Azure drops some features that are typically available in other NoSQL products. It is like the ambitious mileage advertised for a motorbike: the manufacturer tells you that you will get it only under specific road, weather and load conditions.

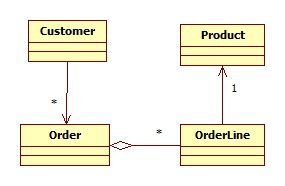

I have used the famous Customer-Order domain model in this post, as shown in the figure.

The actual classes are Customer, Order, OrderLine and Product.
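As a rough illustration of the Customer-Order model, here is a minimal sketch in Python rather than the .NET of the original post. Every field name, the `OrderStatus` enumeration and the `total` helpers are assumptions made for illustration; only the four class names and the relationships between them come from the text.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class OrderStatus(Enum):
    # Hypothetical enumeration, included because enums come up later in the post.
    PENDING = 1
    SHIPPED = 2


@dataclass
class Product:
    product_id: str
    name: str
    unit_price: float


@dataclass
class OrderLine:
    product: Product  # held by reference
    quantity: int

    def total(self) -> float:
        return self.product.unit_price * self.quantity


@dataclass
class Order:
    order_id: str
    status: OrderStatus = OrderStatus.PENDING
    lines: List[OrderLine] = field(default_factory=list)

    def total(self) -> float:
        return sum(line.total() for line in self.lines)


@dataclass
class Customer:
    customer_id: str
    name: str
    orders: List[Order] = field(default_factory=list)
```

The domain objects know nothing about partition keys or row keys here; that mapping is exactly what the persistence layer has to add.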

Is a Key-Value Data Store Enough?

Key-value data stores were the starting point of the NoSQL revolution; document data stores were later widely adopted for object persistence. A document data store can persist an object (a complex data type) against a key, whereas a key-value data store can persist only scalar values. This means that a key-value entity model looks very much like a relational representation (primary key, foreign key and link table), while in a document data store we can choose to embed one object in another. In the example above, a Customer's Order objects can be embedded within the Customer, while OrderLine and Product would be held by reference. However, Azure Table Storage is just a key-value data store, so you still have to supply the metadata for referential integrity yourself.
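To make the difference concrete, here is a minimal Python sketch using plain dictionaries. `PartitionKey` and `RowKey` are the key properties Azure Table Storage expects on every entity; the function names, the other property names and the `order_id:index` row-key scheme are assumptions chosen for illustration.

```python
def order_as_document(customer_name, order_id, lines):
    """Document style: the order and its lines nest inside the customer."""
    return {
        "name": customer_name,
        "orders": [
            {
                "id": order_id,
                "lines": [
                    {"product_id": product_id, "quantity": quantity}
                    for product_id, quantity in lines
                ],
            }
        ],
    }


def order_as_entities(partition_key, customer_id, order_id, lines):
    """Key-value style: one flat row per object, linked by scalar key columns."""
    order_row = {
        "PartitionKey": partition_key,
        "RowKey": order_id,
        "CustomerId": customer_id,  # plays the role of a foreign key
    }
    line_rows = [
        {
            "PartitionKey": partition_key,
            "RowKey": f"{order_id}:{index}",  # hypothetical row-key scheme
            "OrderId": order_id,
            "ProductId": product_id,
            "Quantity": quantity,
        }
        for index, (product_id, quantity) in enumerate(lines, start=1)
    ]
    return order_row, line_rows
```

In the flat form nothing stops an `OrderId` from pointing at a row that does not exist, which is why the integrity checks end up in application code.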

Am I Smart Enough to Choose the “Partition Key”?

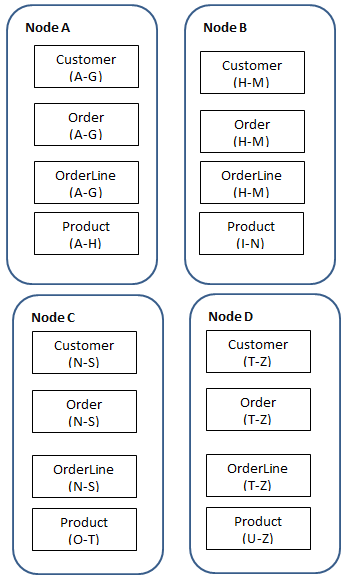

The physical location of table data in Azure (as in other partitioned NoSQL data stores) is determined by the partition key (in MongoDB, the shard key). You get index-like fast query results only when you supply both the partition key and the row key. Hence, choosing the partition key is one of the major architectural decisions for a cloud application. How smartly you choose it is what matters here! In the Customer-Order model, we can simply choose the following partition keys for the respective tables:

- Customer – first letter of Name in upper case

- Product – first letter of Name in upper case

- Order – reuse the customer's partition key, since an order is always placed by a customer.

- OrderLine – use either the Order table's partition key or the Product table's partition key. If we choose the Order table's, the OrderLine partitions follow the Customer table's partition key.
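The partition key choices listed above can be sketched as a few small Python functions. The function names and the `follow_order` flag are hypothetical; the rules themselves are the ones from the list.

```python
def name_partition_key(name: str) -> str:
    """Customer and Product: the first letter of the name, in upper case."""
    stripped = name.strip()
    if not stripped:
        raise ValueError("name must not be empty")
    return stripped[0].upper()


def order_partition_key(customer_name: str) -> str:
    """Order: reuse the partition key of the customer who placed it."""
    return name_partition_key(customer_name)


def order_line_partition_key(customer_name: str, product_name: str,
                             follow_order: bool = True) -> str:
    """OrderLine: follow either the Order (and so the Customer) or the Product."""
    source = customer_name if follow_order else product_name
    return name_partition_key(source)
```

With this scheme each table has at most a few dozen distinct partition key values, however many rows it holds, which is part of what the concerns below are about.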

The figure depicts how these tables would be distributed in a data center with four nodes.

Now the concerns are:

Does table partitioning always happen, or does it depend on capacity? Typically, NoSQL data stores (like MongoDB) start “sharding” when the current machine runs out of drive capacity, which seems a very sensible approach. However, there is no clear picture of how Azure Table Storage shards.

The data store is even smarter than I am when sharding. MongoDB only asks which property or properties of the object to use as the “sharding” key. Based on the load, such data stores scale the data out across servers; the sharding algorithm intelligently splits the entities among the available servers based on the values of that key. Azure, however, asks for the exact value of the partition key and groups together the entities that share the same value. Azure does not reveal the internals of how partitioning happens. Will it scale out across all the nodes in the data center, or is it limited to some number? There is no clear picture.
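The contrast between the two styles can be illustrated with a deliberately simplified Python sketch. It is not how either product is implemented: `group_by_partition_key` only mimics grouping by the exact value the application supplies, and `split_by_key_range` mimics range-based splitting on a key property, with the range boundaries passed in by hand where a real store would pick and move them itself.

```python
import bisect
from collections import defaultdict


def group_by_partition_key(entities):
    """Partition-key style: entities with the same exact value stay together."""
    partitions = defaultdict(list)
    for entity in entities:
        partitions[entity["PartitionKey"]].append(entity)
    return dict(partitions)


def split_by_key_range(entities, key, boundaries):
    """Shard-key style: entities fall into ranges of the key's values.

    `boundaries` must be sorted; N boundaries produce N + 1 chunks.
    """
    chunks = [[] for _ in range(len(boundaries) + 1)]
    for entity in entities:
        chunks[bisect.bisect_right(boundaries, entity[key])].append(entity)
    return chunks
```

In the first function the application fixes the grouping up front; in the second the grouping can change simply by choosing different boundaries, without touching the entities.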

What happens if the entities with the same partition key on a single table server run out of storage capacity? I do not have a clear picture. Some papers mention that the table server is an abstraction, called the “access layer”, which in turn sits on a “persistent storage layer” containing scalable storage drives. The capacity of the drives is increased based on the current storage usage of a table server.

Interestingly, I found a reply to a similar question in one of the Azure forums (posted a couple of years ago):

“…our access layer to the partitions is separated from our persistent storage layer. Even though we serve up accesses to a single partition from a single partition server, the data being stored for the partition is not constrained to just the disk space on that server. Instead, the data being stored for that partition is done through a level of indirection that allows it to span multiple drives and multiple storage nodes.”

So we cannot tell from the size of the data alone how many table servers will actually be created for a table. Consider a business with many orders but a limited product catalog and a small customer base: its Order and OrderLine tables ought to be scaled out widely, yet with the partition keys chosen above these two tables are constrained by the small Customer table. A Web 2.0 company, by contrast, may have many products and customers, and proportionally very large Order and OrderLine tables. If these are scaled out over a large number of table servers, co-location of OrderLine with Product becomes as important as co-location of Order with Customer. If the Azure Table Storage scale-out algorithm partitions each table using knowledge of that table alone, unnecessary network latency will be introduced.

Is the ADO.NET Data Services Serializer Enough for the Business?

Enumerations are very common in domain models. However, the ADO.NET Data Services serializer does not serialize them. We need either to remove the enumerated properties or to write a custom serializer.
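One common workaround, sketched here in Python rather than against the ADO.NET Data Services API, is to store the enumeration member's name as a plain string and convert it back on load. `OrderStatus`, `to_entity` and `from_entity` are hypothetical names used only for this illustration.

```python
from enum import Enum


class OrderStatus(Enum):
    PENDING = 1
    SHIPPED = 2


def to_entity(order_id: str, status: OrderStatus) -> dict:
    """Flatten the enum to a scalar the table can store."""
    return {"RowKey": order_id, "Status": status.name}


def from_entity(entity: dict):
    """Rebuild the enum from the stored name; raises KeyError if it is unknown."""
    return entity["RowKey"], OrderStatus[entity["Status"]]
```

Storing the name rather than the numeric value keeps the rows readable and survives renumbering of the members, at the cost of breaking if a member is renamed.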

Final Words

So, you can either teach me what you know about the above concerns or add your own concerns to this post.