We are in the multi-core era, and our applications are expected to use these cores effectively. Going parallel simply means partitioning the work into smaller pieces that are executed on the available processors of the target system. Up to .NET 3.5, going parallel meant using the ThreadPool and Thread classes ourselves; there was no component that partitions work into work items and schedules them on different cores. Intel offers a parallel framework for MC++, but I am not sure how easy it is to use.

Even though threads are the foundation of parallelism, what a developer needs for parallel programming is not the thread itself but the unit of work, the work item, called a “Task”.

.NET 4.0 Parallel Extensions

A task represents a work item that is performed independently, alongside other work items. This is the approach taken by the experts in Microsoft’s Parallel Computing division, who released the Parallel Extensions (Task Parallel Library and Parallel LINQ) in .NET 4.0 Beta 1. As with Microsoft’s other frameworks, developers need not worry about the internals: the Parallel Extensions take care of partitioning the work into tasks and scheduling those work items.
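
To make the idea concrete, here is a minimal sketch of two independent work items expressed as tasks. It uses Task.Factory.StartNew, which is available from .NET 4.0 onward; the TaskBasics class and the sum-of-squares workload are hypothetical illustrations, not part of the Parallel Extensions.

```csharp
using System;
using System.Threading.Tasks;

public static class TaskBasics
{
    // Hypothetical workload: the sum of the squares of an array.
    private static int SumOfSquares(int[] values)
    {
        int sum = 0;
        foreach (int v in values)
            sum += v * v;
        return sum;
    }

    // Starts two independent work items as tasks and combines their results.
    public static int SumOfSquaresInTwoTasks(int[] left, int[] right)
    {
        // Each task is an independent unit of work; the TPL decides which
        // thread-pool thread runs it.
        Task<int> first = Task.Factory.StartNew(() => SumOfSquares(left));
        Task<int> second = Task.Factory.StartNew(() => SumOfSquares(right));

        // Reading Result blocks until the corresponding task has finished.
        return first.Result + second.Result;
    }
}
```

Calling `TaskBasics.SumOfSquaresInTwoTasks(new[] { 1, 2, 3 }, new[] { 4, 5 })` returns 55: 14 from the first task plus 41 from the second.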

Task and Thread

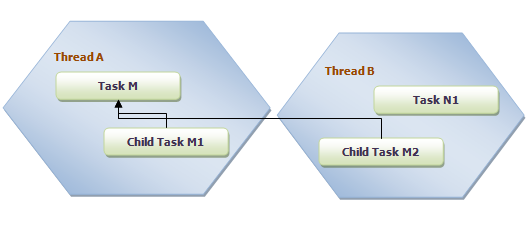

Let us first understand the relationship between task and thread. See the following figure.

Typically, a task runs on a thread. A task may contain one or more child tasks, which do not necessarily run on the parent task’s thread. In the above figure, Child Task M2 of Task M runs on Thread B.
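
The parent/child relationship can be sketched with TaskCreationOptions.AttachedToParent, which makes a parent task complete only after its attached children have completed. This is a minimal illustration under that assumption; the ChildTaskDemo name is hypothetical, and whether the child runs on a different thread from its parent varies from run to run.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class ChildTaskDemo
{
    // Runs a parent task that starts one attached child task and returns
    // the managed thread IDs observed by the parent and by the child.
    public static int[] RunParentWithChild()
    {
        int parentThread = 0;
        int childThread = 0;

        Task parent = Task.Factory.StartNew(() =>
        {
            parentThread = Thread.CurrentThread.ManagedThreadId;

            // AttachedToParent ties this child's completion to the parent.
            // The child may be picked up by a different pool thread.
            Task.Factory.StartNew(() =>
            {
                childThread = Thread.CurrentThread.ManagedThreadId;
            }, TaskCreationOptions.AttachedToParent);
        });

        // Waiting on the parent also waits for its attached child.
        parent.Wait();
        return new[] { parentThread, childThread };
    }
}
```

Printing the two IDs a few times typically shows the child sometimes sharing the parent’s thread and sometimes not, which matches the figure: a child task is not bound to its parent’s thread.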

Task Parallel Library (TPL)

This library provides APIs for task-based parallel programming in the System.Threading and System.Threading.Tasks namespaces. The partitioned tasks are automatically scheduled on the available processors by the task scheduler, which is built on the ThreadPool. The work-stealing queue algorithm in the task scheduler makes life easier; I’ll explain it in the next post.

Because the task scheduler assigns tasks to processors at run time, a program can ‘scale up’ without recompilation: the same binary uses fewer or more cores depending on the target machine.
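
As a sketch of this run-time behaviour, the loop below is written once and its degree of parallelism is decided when it runs. ParallelOptions.MaxDegreeOfParallelism is used here only to simulate a single-core machine; the ScaleDemo class and the prime-counting workload are hypothetical.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class ScaleDemo
{
    // Counts primes in [2, limit). The loop body is written once; how many
    // cores it actually uses is decided by the scheduler at run time.
    // maxDegree = -1 means "no explicit limit".
    public static int CountPrimes(int limit, int maxDegree)
    {
        int count = 0;
        var options = new ParallelOptions { MaxDegreeOfParallelism = maxDegree };
        Parallel.For(2, limit, options, n =>
        {
            if (IsPrime(n))
                Interlocked.Increment(ref count); // shared counter, so update atomically
        });
        return count;
    }

    // Simple trial division; n is always >= 2 here.
    private static bool IsPrime(int n)
    {
        for (int d = 2; d * d <= n; d++)
            if (n % d == 0)
                return false;
        return true;
    }
}
```

`ScaleDemo.CountPrimes(100, -1)` and `ScaleDemo.CountPrimes(100, 1)` both return 25; only the number of cores used differs, and Environment.ProcessorCount reports how many the current machine offers.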

There are two types of parallelism you can express using the TPL:

- Data Parallelism

- Task Parallelism

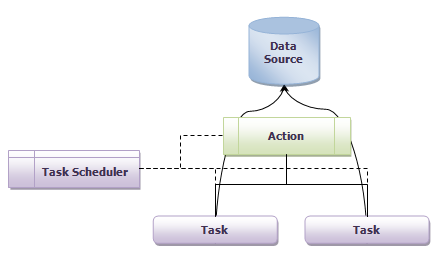

Data Parallelism

It is very common to perform a set of actions against each element in a collection of data using for and for..each. Parallel.For() and Parallel.ForEach() enable to make your collection processing actions to be parallel. The following figure depicts this.
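
A minimal sketch of turning an ordinary for loop into Parallel.For, assuming each iteration touches only its own element. The LoopConversion class and the squaring workload are hypothetical; Parallel.ForEach follows the same pattern for any IEnumerable&lt;T&gt;.

```csharp
using System;
using System.Threading.Tasks;

public static class LoopConversion
{
    // Sequential version: one thread walks the array in order.
    public static long[] SquaresSequential(int[] input)
    {
        var result = new long[input.Length];
        for (int i = 0; i < input.Length; i++)
            result[i] = (long)input[i] * input[i];
        return result;
    }

    // Parallel version: the loop body is unchanged, but iterations may run
    // concurrently on several cores. Each iteration writes only its own
    // slot of the result array, so no locking is needed.
    public static long[] SquaresParallel(int[] input)
    {
        var result = new long[input.Length];
        Parallel.For(0, input.Length, i =>
        {
            result[i] = (long)input[i] * input[i];
        });
        return result;
    }
}
```

Both methods return the same array; the parallel one pays off only when the per-element work is large enough to outweigh the scheduling overhead.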

Let us see this with a typical customer-order collection. The following code shows the Customer and Order declarations.
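
The declarations could look like the following minimal sketch. All property names (CustomerId, Name, Orders, OrderId, Product, Amount) are assumptions chosen for illustration, not the article’s exact types.

```csharp
using System.Collections.Generic;

// Hypothetical Order type; property names are assumptions.
public class Order
{
    public int OrderId { get; set; }
    public string Product { get; set; }
    public decimal Amount { get; set; }
}

// Hypothetical Customer type that owns its list of orders.
public class Customer
{
    public int CustomerId { get; set; }
    public string Name { get; set; }

    // Initialised up front so orders can be added immediately.
    public List<Order> Orders { get; } = new List<Order>();
}
```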

Let us create an in-memory collection for these types, as in the code below.
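
A sketch of such a collection, assuming hypothetical Customer and Order types with the properties shown (declared again so the block compiles on its own). The SampleData class, the names, and the amounts are made up for illustration.

```csharp
using System.Collections.Generic;

// Hypothetical types; property names are assumptions.
public class Order
{
    public int OrderId { get; set; }
    public string Product { get; set; }
    public decimal Amount { get; set; }
}

public class Customer
{
    public int CustomerId { get; set; }
    public string Name { get; set; }
    public List<Order> Orders { get; } = new List<Order>();
}

public static class SampleData
{
    // Builds a small in-memory customer-order collection.
    // The nested "Orders = { ... }" initialiser calls Orders.Add for each item.
    public static List<Customer> Create()
    {
        return new List<Customer>
        {
            new Customer
            {
                CustomerId = 1, Name = "Alice",
                Orders =
                {
                    new Order { OrderId = 101, Product = "Keyboard", Amount = 45.00m },
                    new Order { OrderId = 102, Product = "Mouse", Amount = 20.00m }
                }
            },
            new Customer
            {
                CustomerId = 2, Name = "Bob",
                Orders =
                {
                    new Order { OrderId = 201, Product = "Monitor", Amount = 180.00m }
                }
            },
            new Customer
            {
                CustomerId = 3, Name = "Carol",
                Orders =
                {
                    new Order { OrderId = 301, Product = "Laptop", Amount = 950.00m },
                    new Order { OrderId = 302, Product = "Dock", Amount = 120.00m },
                    new Order { OrderId = 303, Product = "Cable", Amount = 10.00m }
                }
            }
        };
    }
}
```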

Now let us write simple parallel code that iterates through each customer and its orders.
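
The nested loop can be sketched as follows, assuming hypothetical Customer and Order types like the ones declared here. The outer Parallel.ForEach handles customers and the inner one handles each customer’s orders; the printed lines are also gathered in a ConcurrentBag only so the result can be checked, and the CustomerReport name and BuildSample helper are hypothetical.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical types; property names are assumptions.
public class Order
{
    public int OrderId { get; set; }
    public string Product { get; set; }
    public decimal Amount { get; set; }
}

public class Customer
{
    public int CustomerId { get; set; }
    public string Name { get; set; }
    public List<Order> Orders { get; } = new List<Order>();
}

public static class CustomerReport
{
    // Hypothetical sample data: customer number c places c orders.
    public static List<Customer> BuildSample(int customerCount)
    {
        var customers = new List<Customer>();
        for (int c = 1; c <= customerCount; c++)
        {
            var customer = new Customer { CustomerId = c, Name = "Customer" + c };
            for (int o = 1; o <= c; o++)
                customer.Orders.Add(new Order { OrderId = c * 100 + o, Product = "Item" + o, Amount = 10m * o });
            customers.Add(customer);
        }
        return customers;
    }

    // Outer loop: customers in parallel. Inner loop: that customer's orders
    // in parallel. Each printed line is also stored in a thread-safe bag;
    // the order of the lines is not deterministic.
    public static ConcurrentBag<string> Print(IEnumerable<Customer> customers)
    {
        var lines = new ConcurrentBag<string>();
        Parallel.ForEach(customers, customer =>
        {
            string header = "Customer " + customer.CustomerId + ": " + customer.Name;
            lines.Add(header);
            Console.WriteLine(header);

            Parallel.ForEach(customer.Orders, order =>
            {
                string detail = "  Order " + order.OrderId + " (" + order.Product + ") for customer "
                                + customer.CustomerId + ": " + order.Amount;
                lines.Add(detail);
                Console.WriteLine(detail);
            });
        });
        return lines;
    }
}
```

Because iterations run concurrently, the lines can appear in any order; an order line of one customer may be printed before another customer’s header.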

In the above code, printing a customer’s details is one task, and printing the order details for that customer is its child task. I’ve used a simple overload of Parallel.ForEach.
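
Presumably this is the simplest overload, `Parallel.ForEach<TSource>(IEnumerable<TSource> source, Action<TSource> body)`, which returns a ParallelLoopResult; that is an assumption, and it is the overload the sketch below uses. The ForEachOverload class and its counting workload are hypothetical.

```csharp
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

public static class ForEachOverload
{
    // Overload assumed here (System.Threading.Tasks.Parallel):
    //   public static ParallelLoopResult ForEach<TSource>(
    //       IEnumerable<TSource> source, Action<TSource> body)
    // Returns how many items were visited; 'completed' reports whether the
    // loop ran to completion (no Break or Stop was requested).
    public static int CountVisited(IEnumerable<int> source, out bool completed)
    {
        int visited = 0;
        ParallelLoopResult result = Parallel.ForEach(source, item =>
        {
            Interlocked.Increment(ref visited); // body may run on several threads
        });
        completed = result.IsCompleted;
        return visited;
    }
}
```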

The task scheduler partitions the customers and orders and schedules them onto the available processors.
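
One way to observe this partitioning is to record which thread processed each item. In this sketch (the PartitionDemo name and the SpinWait workload are hypothetical), the number of participating threads and the split of items between them vary by machine and from run to run; only the total is fixed.

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class PartitionDemo
{
    // Maps each managed thread ID to the number of items it processed.
    public static ConcurrentDictionary<int, int> ItemsPerThread(int itemCount)
    {
        var perThread = new ConcurrentDictionary<int, int>();
        Parallel.ForEach(Enumerable.Range(0, itemCount), item =>
        {
            int id = Thread.CurrentThread.ManagedThreadId;
            // Insert 1 for a new thread, otherwise increment its count atomically.
            perThread.AddOrUpdate(id, 1, (key, current) => current + 1);
            Thread.SpinWait(10000); // simulate a little work per item
        });
        return perThread;
    }
}
```

Printing each key/value pair on a multi-core machine usually shows several thread IDs sharing the items, which is the partitioning described above.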

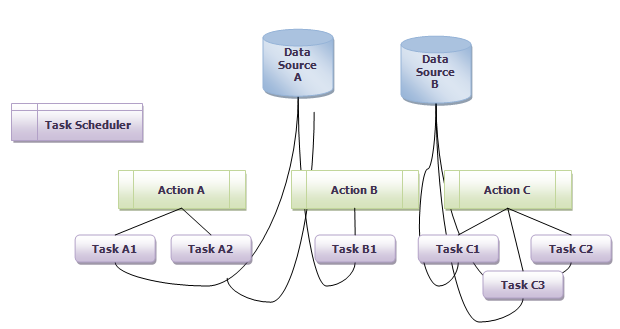

Task Parallelism

In cases like multiple distinct actions to be performed concurrently on the same or different source. The following figure depicts this. Note that task count need not be equal to number of actions.

Parallel.Invoke() is used for this. See the following code.
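
A minimal sketch of Parallel.Invoke running two distinct actions over the same customer list, assuming hypothetical Customer and Order types (declared here so the block compiles on its own). One action counts all orders and the other finds the largest order amount; the CustomerActions name and both actions are illustrative only.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical types; property names are assumptions.
public class Order
{
    public int OrderId { get; set; }
    public string Product { get; set; }
    public decimal Amount { get; set; }
}

public class Customer
{
    public int CustomerId { get; set; }
    public string Name { get; set; }
    public List<Order> Orders { get; } = new List<Order>();
}

public static class CustomerActions
{
    // Two distinct actions over the same customers, run concurrently.
    // Each action writes only its own local, so no locking is needed, and
    // Invoke returns only after both actions have finished.
    public static void Summarize(List<Customer> customers,
                                 out int totalOrders, out decimal largestOrder)
    {
        int orders = 0;
        decimal largest = 0m;

        Parallel.Invoke(
            () => orders = customers.Sum(c => c.Orders.Count),
            () => largest = customers.SelectMany(c => c.Orders)
                                     .Select(o => o.Amount)
                                     .DefaultIfEmpty(0m)
                                     .Max());

        totalOrders = orders;
        largestOrder = largest;
    }
}
```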

I’ve used a simple overload of Parallel.Invoke().
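
Presumably this is the simplest overload, `Parallel.Invoke(params Action[] actions)`; that is an assumption here. Because the parameter is `params Action[]`, the actions can be listed inline or passed as an array built at run time, as this hypothetical InvokeOverload sketch does.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class InvokeOverload
{
    // Overload assumed here (System.Threading.Tasks.Parallel):
    //   public static void Invoke(params Action[] actions)
    // Builds 'actionCount' actions at run time and returns how many ran.
    public static int RunActions(int actionCount)
    {
        int completed = 0;
        var actions = new Action[actionCount];
        for (int i = 0; i < actionCount; i++)
            actions[i] = () => Interlocked.Increment(ref completed);

        // Blocks until every action has run; the runtime decides how to
        // schedule them, so tasks need not map one-to-one onto actions.
        Parallel.Invoke(actions);
        return completed;
    }
}
```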

I’ve specified two different actions that operate on the customers.

The source code for this article is available at http://www.udooz.net/file-drive/doc_details/8-net-40-tlp-demo-1.html.

In the next post, I’ll explain more about tasks, Parallel LINQ, and the task scheduler.